I mostly stay away from individual stock investing. It's too risky, and I don't pretend to know better than the rest of the financial market. My "dumb money" mostly goes into broad market ETFs with low management fees. However, sometimes you just want to try your luck at the casino.

That's how I felt last November when I had a lump sum of money I needed to invest. Instead of putting all of it into a low-risk ETF, I decided to do a bit of research and pick some other investments. Now, I'll admit I didn't pull up any of these companies' financial records. I didn't do fundamental analysis. But I read some things (not just /r/wallstreetbets) and chose some stocks. So my choices were based on some data, but mostly vibes.

It's been exactly 12 months since I invested this money. I wanted to look back and see how these investment choices panned out. Of course, 1 year isn't super long in the grand scheme of market cycles, but it's a useful exercise nonetheless.

This also gives me a chance to do some data visualization, which I haven't done much of before. I like Kotlin, so I decided to try out Kotlin Notebooks combined with Kandy, a graphing library natively supported by Kotlin Notebooks and JetBrains IDEs. The complete code is on my GitHub if you're interested.

The actual dollar amount isn't relevant for this discussion, so let's just refer to it as D here.

Here's the breakdown of how I invested the D dollars.

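```kotlin
// Ticker symbols used as map keys throughout (assumed to be plain string constants)
val DIS = "DIS"
val MCD = "MCD"
val VGIT = "VGIT"
val TAN = "TAN"
val QQQ = "QQQ"

// Percentage of D put into each security
val relativeAmounts = mapOf(
    // Disney
    DIS to 5.17,
    // McDonald's
    MCD to 6.24,
    // intermediate-term Treasury bond ETF
    VGIT to 19.52,
    // solar technology ETF
    TAN to 25.78,
    // Nasdaq-100 tracking ETF gets the remainder
    QQQ to 100 - 5.17 - 6.24 - 19.52 - 25.78 // about 43.29%
)
```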

Let's visualize this breakdown:

So, I still put most of the money in ETFs, but only QQQ has broad market exposure. And VGIT is a bond ETF, which is generally considered a hedge against market downturns.

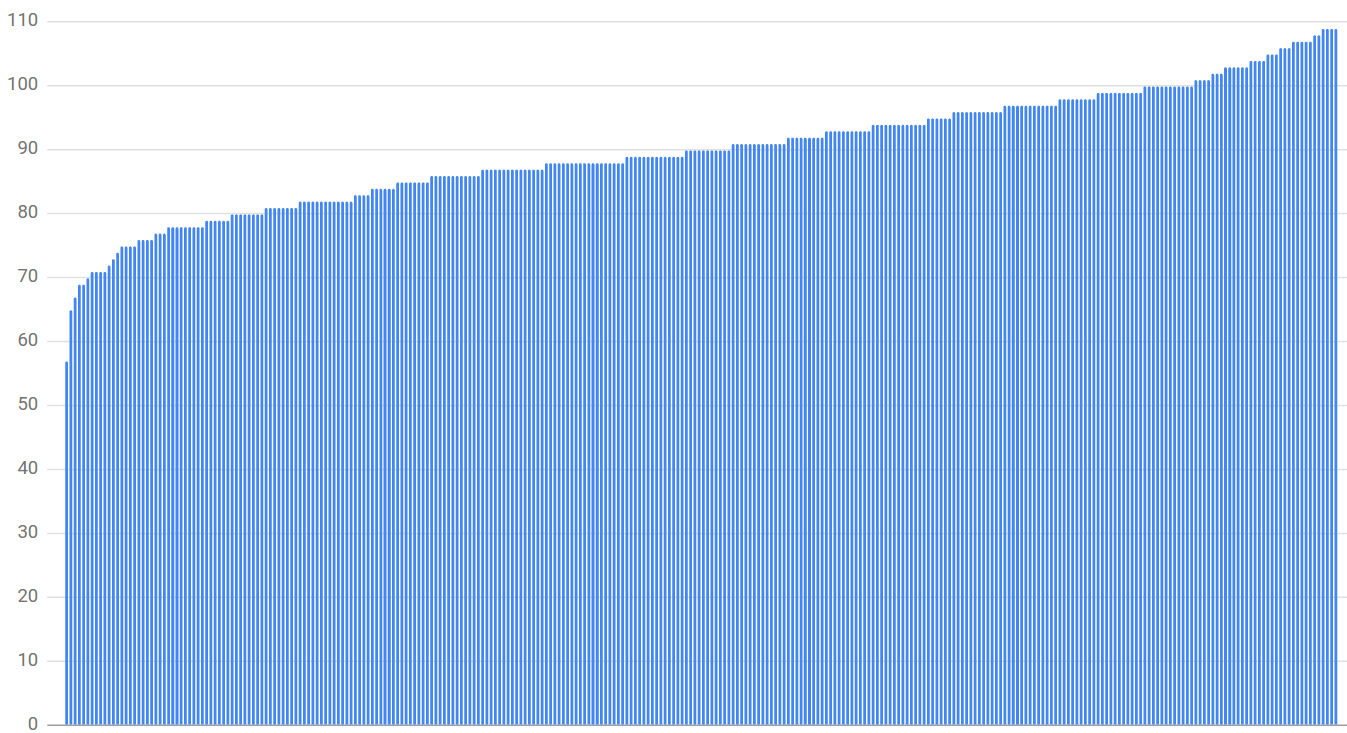
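To check the "most of the money in ETFs" claim, here's a minimal notebook-cell sketch that recomputes QQQ's remainder and the combined ETF share from the percentages in `relativeAmounts`. The `etfs` set is an illustrative grouping for this check (VGIT, TAN, and QQQ are the three ETFs in the list), not something from the original notebook.

```kotlin
// Portfolio weights in percent, copied from relativeAmounts above
val weights = mapOf(
    "DIS" to 5.17,
    "MCD" to 6.24,
    "VGIT" to 19.52,
    "TAN" to 25.78,
)

// QQQ gets whatever is left over after the other four positions
val withQqq = weights + ("QQQ" to 100 - weights.values.sum())

// Illustrative grouping: which tickers are ETFs
val etfs = setOf("VGIT", "TAN", "QQQ")
val etfShare = withQqq.filterKeys { it in etfs }.values.sum()

println("QQQ share: %.2f%%".format(withQqq.getValue("QQQ")))
println("ETF share: %.2f%%".format(etfShare))
```

That works out to about 88.6% of D in ETFs, with QQQ alone at about 43.3%.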

It's pretty straightforward to get historical price data for all these securities from the [Nasdaq website](nasdaq url). I downloaded the last 5 years of data for each of them, imported them into my Notebook, sanitized the data a bit, and then plotted the last 1.5 years on a graph:

```kotlin
import java.text.SimpleDateFormat

// Take only the last 1.5 years (roughly 252 trading days in a year)
val NUM_DAYS: Int = 252 + 126

fun AnyFrame.formatStonks() = this
    .remove("Volume", "Open", "High", "Low")
    // The CSVs list the newest day first, so this keeps the most recent NUM_DAYS rows
    .take(NUM_DAYS)
    .add("DateFmt") {
        SimpleDateFormat("MM/dd/yyyy").parse(it["Date"] as String)
    }
    .add("Price") {
        val price = it["Close/Last"]
        // Some files have a preceding '$' on their prices and some don't, so the
        // column is read either as String or as a number. removePrefix only strips
        // a '$' that is actually there, instead of blindly dropping the first character.
        if (price is String) {
            price.removePrefix("$").toDouble()
        } else {
            (price as Number).toDouble()
        }
    }
    .sortBy("DateFmt") // sort in ascending order by date
    .remove("Close/Last", "DateFmt")

val dis = DataFrame.read("historical-price-data/disney.csv").formatStonks()
val mcd = DataFrame.read("historical-price-data/mcd.csv").formatStonks()
val tan = DataFrame.read("historical-price-data/tan.csv").formatStonks()
val vgit = DataFrame.read("historical-price-data/vgit.csv").formatStonks()
val qqq = DataFrame.read("historical-price-data/qqq.csv").formatStonks()
```
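The '$' prefix is the fiddly part of sanitizing these CSVs. Here's a standalone sketch of a more defensive parser that accepts either form. `parsePrice` is a hypothetical helper and the sample cells are made up; the point is that a value without a '$' keeps all of its digits.

```kotlin
// Hypothetical helper: normalize a "Close/Last" cell to a Double.
// Accepts strings like "$189.95" or "189.95", or a value already parsed as a number.
fun parsePrice(raw: Any?): Double = when (raw) {
    is Number -> raw.toDouble()
    is String -> raw.trim().removePrefix("$").toDouble()
    else -> error("Unexpected price value: $raw")
}

// Made-up sample cells mixing both formats
val samples: List<Any> = listOf("\$189.95", "95.12", 42.5)
val prices = samples.map { parsePrice(it) }
println(prices)
```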

The result is shown below, with a dashed line indicating the 11/30/2023 purchase date:

This gives a basic indication of how things went. QQQ did well. TAN did not. But a better comparison is, of course, the relative change rather than the absolute price of each security. In other words, I want to visualize my return on each investment instead.
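Written as a formula, with $P_t$ the closing price on trading day $t$ and $P_{t_0}$ the closing price on the 11/30/2023 purchase date, the relative return for each security is:

$$
r_t = \frac{P_t - P_{t_0}}{P_{t_0}} \times 100
$$

So every series starts at $r_{t_0} = 0$, and a share bought at 100 dollars that later closes at 131 dollars would show $r_t = 31$.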

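```kotlin
// November 30th, 2023 sits at index 126 (zero-based) in the data
val NOV_30 = 126

// Percent change of each price relative to the price on the purchase date
fun List<Number>.getRelativeReturnAsPercent(): List<Float> {
    val base = this[NOV_30].toFloat()
    return this.map { (it.toFloat() - base) / base * 100 }
}

// Assumed helpers (their definitions aren't shown in this post): each frame's Price
// column as a list, plus Date and Symbol columns for the five stacked series
val disPrice = dis["Price"].toList().map { it as Number }
val mcdPrice = mcd["Price"].toList().map { it as Number }
val tanPrice = tan["Price"].toList().map { it as Number }
val vgitPrice = vgit["Price"].toList().map { it as Number }
val qqqPrice = qqq["Price"].toList().map { it as Number }
val datesX5 = List(5) { dis["Date"].toList() }.flatten()
val symbols = listOf(DIS, MCD, TAN, VGIT, QQQ).flatMap { symbol -> List(NUM_DAYS) { symbol } }

val disReturn = disPrice.getRelativeReturnAsPercent()
val mcdReturn = mcdPrice.getRelativeReturnAsPercent()
val tanReturn = tanPrice.getRelativeReturnAsPercent()
val vgitReturn = vgitPrice.getRelativeReturnAsPercent()
val qqqReturn = qqqPrice.getRelativeReturnAsPercent()

// Long format: one row per (date, symbol), series concatenated in the same order as symbols
val allReturns = dataFrameOf(
    "Date" to datesX5,
    "Return (%)" to disReturn + mcdReturn + tanReturn + vgitReturn + qqqReturn,
    "Symbol" to symbols
)

allReturns.groupBy("Symbol").plot {
    line {
        x("Date")
        y("Return (%)")
        color("Symbol")
    }
    // Dashed marker at the purchase date
    vLine {
        xIntercept(listOf("11/30/2023"))
        type = LineType.DASHED
    }
}
```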

Side note: halfway through this exercise, I found out that the graphing library, Kandy, is still very much in development. The current version is 0.7, and the developer documentation is incomplete. Plotting multiple series of data on the same graph isn't well supported: you have to do some hacky list concatenation, which was really lame. I don't think I'll use Kandy again until they've improved this.
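Kandy aside, the stacking itself can at least be generated from a map of series instead of being written out by hand. This sketch is plain Kotlin (no Kandy or DataFrame calls). The tickers, dates, and return values are placeholders, and the three resulting lists are the same kind of columns that go into `dataFrameOf` in the returns code above.

```kotlin
// Placeholder inputs: one return series per ticker, all over the same dates
val dates = listOf("11/28/2023", "11/29/2023", "11/30/2023")
val returnsBySymbol = mapOf(
    "QQQ" to listOf(-0.5f, -0.2f, 0.0f),
    "TAN" to listOf(1.0f, 0.4f, 0.0f),
)

// Flatten into three parallel "long format" columns: each row is (date, return, symbol),
// with the series stacked one after another in the map's insertion order
val dateCol = returnsBySymbol.keys.flatMap { dates }
val returnCol = returnsBySymbol.values.flatten()
val symbolCol = returnsBySymbol.flatMap { (symbol, series) -> List(series.size) { symbol } }
```

Adding a sixth ticker then means adding one map entry rather than touching three concatenations.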

This gives us a consistent comparison of each stock's performance since the buy date:

Here we see QQQ is up 31% right now, while TAN is down 20%. Everything else is somewhere in the middle.

I want to analyze how my investment choices fared—not just what I bought but also how much I bought, relative to the total D dollars I had. This is called the portfolio return. The formula to calculate it is straightforward since I'm only considering a single purchase date. You just multiply each investment's return by the relative amount invested and then sum them up. It's "weighting" the return by the percentage of your portfolio exposed to it, essentially.
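In symbols, with $w_i$ the percentage of D invested in security $i$ (the values in `relativeAmounts`, which sum to 100) and $r_{i,t}$ its relative return on day $t$, the portfolio return is:

$$
R_t = \sum_{i} \frac{w_i}{100} \, r_{i,t}
$$

Each term $\frac{w_i}{100} r_{i,t}$ is that security's weighted return, measured in percentage points of the whole portfolio.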

```kotlin
// Weight each return series by the percentage of D invested in it
val disWReturn = disReturn.map { it * relativeAmounts[DIS]!! / 100 }
val mcdWReturn = mcdReturn.map { it * relativeAmounts[MCD]!! / 100 }
val tanWReturn = tanReturn.map { it * relativeAmounts[TAN]!! / 100 }
val vgitWReturn = vgitReturn.map { it * relativeAmounts[VGIT]!! / 100 }
val qqqWReturn = qqqReturn.map { it * relativeAmounts[QQQ]!! / 100 }

// All series have the same length, so one list's indices give the range of day indices
val days = disWReturn.indices
val all = listOf(disWReturn, mcdWReturn, tanWReturn, vgitWReturn, qqqWReturn)

// Portfolio return on each day = sum of the weighted returns on that day
val portfolioWReturn = days.map { index ->
    all.sumOf { it[index] }
}
```
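The same weighted sum can be written more compactly by keeping returns and weights in maps keyed by ticker. This is a minimal, self-contained sketch rather than the notebook's actual code: `portfolioReturn` is a hypothetical helper, it assumes every return series has one value per trading day (same length), and the two-ticker, three-day data is made up.

```kotlin
// Hypothetical helper: portfolio return per day, given each ticker's daily
// return series (in %) and its share of the portfolio (in %, summing to 100)
fun portfolioReturn(
    returns: Map<String, List<Double>>,
    weights: Map<String, Double>,
): List<Double> {
    val numDays = returns.values.first().size
    require(returns.values.all { it.size == numDays }) { "Series lengths differ" }
    return List(numDays) { day ->
        returns.entries.sumOf { (symbol, series) -> series[day] * weights.getValue(symbol) / 100 }
    }
}

// Made-up example: a 60/40 split across two tickers over three days
val example = portfolioReturn(
    returns = mapOf("AAA" to listOf(0.0, 10.0, 20.0), "BBB" to listOf(0.0, -5.0, -10.0)),
    weights = mapOf("AAA" to 60.0, "BBB" to 40.0),
)
println(example)
```

Here the 40% position losing 10% drags the 60% position's 20% gain down to an 8% portfolio return on day three.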

I plotted the overall portfolio return alongside each investment's portfolio weighted return:

This shows that most of the portfolio's gains came from QQQ, which is not surprising. It also shows that DIS and MCD barely affected my overall portfolio, since I only invested about 5 to 6% of D in each.

Let's also plot the portfolio return alongside the absolute returns of each stock. I think this is more illuminating:

Here's where we really see how my investment choices balanced each other out in the aggregate. Fortunately, I still have a positive return after a year, largely because the Nasdaq-100 (mostly tech stocks) had a great year. But investing a quarter of my money in solar didn't pan out so well. It's not always sunny on Wall St.
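To put rough numbers on that, each position's contribution is its return times its weight. A back-of-the-envelope sketch using the rounded figures quoted earlier (QQQ up about 31%, TAN down about 20%), so the results are approximate:

```kotlin
// Rounded one-year returns (%) quoted above, and each position's weight (%)
val qqqReturnPct = 31.0
val tanReturnPct = -20.0
val qqqWeightPct = 100 - 5.17 - 6.24 - 19.52 - 25.78 // about 43.29
val tanWeightPct = 25.78

// Contribution to the portfolio return, in percentage points
val qqqContribution = qqqReturnPct * qqqWeightPct / 100
val tanContribution = tanReturnPct * tanWeightPct / 100

println("QQQ: %+.2f pts, TAN: %+.2f pts".format(qqqContribution, tanContribution))
```

That's roughly +13.4 points from QQQ against about -5.2 from TAN.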

Finally, just to drive the stake into my wallet further, here's my portfolio return plotted against just QQQ:

Overall, I missed out on 31.1 - 10.4 = 20.7 percentage points because of my decision to cosplay as a day trader for fun.

To put that in perspective, if I had invested D = $10,000 last year, I would've had an additional $2,070 in my pocket today. That's like, half a Taylor Swift ticket.
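The arithmetic behind that figure, as a quick sketch: the gap in percentage points times the amount invested, using the same hypothetical D = $10,000.

```kotlin
// One-year returns (%) read off the charts above
val qqqOnlyReturnPct = 31.1
val portfolioReturnPct = 10.4

// Hypothetical amount invested, in dollars
val d = 10_000.0

val gapPoints = qqqOnlyReturnPct - portfolioReturnPct // about 20.7 percentage points
val missedDollars = d * gapPoints / 100

println("Missed out on %.2f points, or \$%,.0f".format(gapPoints, missedDollars))
```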

Oh well, lesson learned: Don't use this unfinished graphing library for data viz anymore. And probably stick to ETFs.